In the previous part of this article we discovered that what many developers associate with WebAssembly is just an MVP.

After that, we learned what the process of defining a new feature looks like.

Now let’s see what the working group is preparing for us!

Runtime performance 🚀

There are many upcoming improvements to the runtime performance of WebAssembly. These features focus on taking full advantage of the modern hardware that powers many machines nowadays.

Multithreading

The first cool thing will be multithreading support. As you probably already know, JavaScript is a single-threaded language (if we don’t consider web workers), and so is WebAssembly.

As mentioned in the first part, very complex desktop applications have already been successfully ported to the browser. They work correctly, but not yet at full power, because they can’t take advantage of the multi-core architectures that modern CPUs often have.

Good news: support for multithreaded programming in WebAssembly is already at an advanced stage! A proposal text is available.

Browsers have already started working on experimental implementations. Chrome already lets you test Wasm threads behind a flag, while support in other browsers depends on re-enabling JS SharedArrayBuffers, which were disabled after the Spectre vulnerability was found. They should be re-enabled soon, so fingers crossed 🤞!
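To make this concrete, here is a minimal TypeScript sketch of the JS-visible building block Wasm threads rely on: a `WebAssembly.Memory` created with `shared: true`, whose `buffer` is a `SharedArrayBuffer` that several workers can view at the same time, plus `Atomics` for safe updates. It assumes an environment where shared memory is enabled (for example a recent Node.js, or a cross-origin-isolated page in today’s browsers). The worker plumbing is left out, and a single thread simulates two updates; `incrementCounter` is a hypothetical helper name.

```typescript
// Wasm threads share one linear memory between workers. On the JS side this is
// a WebAssembly.Memory created with `shared: true`, backed by a SharedArrayBuffer.
const sharedMemory = new WebAssembly.Memory({
  initial: 1, // 1 page = 64 KiB
  maximum: 1, // shared memories must declare a maximum size
  shared: true,
});

const isShared: boolean = sharedMemory.buffer instanceof SharedArrayBuffer;

// Every worker would wrap the same buffer in its own typed-array view.
const counter = new Int32Array(sharedMemory.buffer);

// Atomic read-modify-write, so concurrent increments from different threads
// cannot overwrite each other. Returns the value *before* the addition.
function incrementCounter(view: Int32Array, index: number, amount: number): number {
  return Atomics.add(view, index, amount);
}

const before = incrementCounter(counter, 0, 5);
incrementCounter(counter, 0, 2);
const after = Atomics.load(counter, 0);

console.log(isShared, before, after); // true 0 7
```

In a real threaded app, each worker would receive `sharedMemory` (for example via `postMessage`) and run the same Wasm module against it.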

Examples

A cool app that just shipped to the browser is Google Earth. The team behind it has already taken advantage of the performance improvements that Wasm threads provide: threads make it possible to run heavy calculations without hurting the frame rate.

(Here’s a video comparing performance without threads vs. with threads.)

SIMD

Another cool performance improvement will be SIMD support. SIMD stands for Single Instruction, Multiple Data, and it will allow Wasm applications to perform the same operation on multiple data points simultaneously.

The feature is still under very active development, though, and probably won’t ship in the very near future 😕.

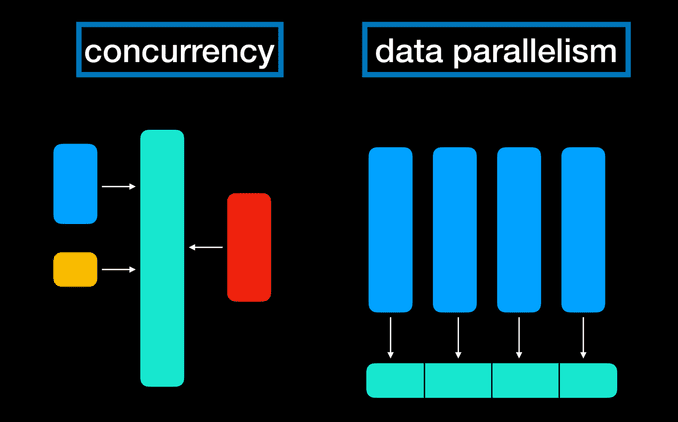

If, like me, you don’t immediately see how these features affect the execution of a program, let’s take a minute to discuss the difference between concurrency (or task parallelism) and data parallelism.

Concurrency is about distributing tasks among processors, while, as you can guess, data parallelism is about distributing data/memory among processors. In these diagrams the green bars represent the data, while the colored ones represent the tasks. As you can see, in data parallelism the same task is applied to different chunks of data in parallel.
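A small TypeScript model can make data parallelism more tangible. It does not use real Wasm SIMD (which operates on 128-bit `v128` values through instructions such as `i32x4.add`); it only simulates four lanes and counts one "instruction" per step, to show how applying the same operation to several data points at once cuts the number of steps. `LANES`, `addScalar`, and `addLanes` are illustrative names.

```typescript
// A 128-bit SIMD register holds four 32-bit integers ("lanes").
const LANES = 4;

interface AddResult {
  out: Int32Array;
  steps: number; // how many "instructions" were issued
}

// Scalar version: one add instruction per element.
function addScalar(a: Int32Array, b: Int32Array): AddResult {
  const out = new Int32Array(a.length);
  let steps = 0;
  for (let i = 0; i < a.length; i++) {
    out[i] = a[i] + b[i];
    steps++;
  }
  return { out, steps };
}

// Simulated SIMD version: each step applies the same add to LANES elements,
// like a single i32x4.add would. Leftover elements are handled one at a time.
function addLanes(a: Int32Array, b: Int32Array): AddResult {
  const out = new Int32Array(a.length);
  let steps = 0;
  let i = 0;
  for (; i + LANES <= a.length; i += LANES) {
    for (let lane = 0; lane < LANES; lane++) {
      out[i + lane] = a[i + lane] + b[i + lane];
    }
    steps++; // one "vector instruction" covering four elements
  }
  for (; i < a.length; i++) {
    out[i] = a[i] + b[i];
    steps++;
  }
  return { out, steps };
}

const a = Int32Array.from([1, 2, 3, 4, 5, 6, 7, 8, 9, 10]);
const b = Int32Array.from([10, 20, 30, 40, 50, 60, 70, 80, 90, 100]);

const scalar = addScalar(a, b);
const simd = addLanes(a, b);
console.log(scalar.steps, simd.steps); // 10 4
```

Both versions produce the same sums; only the number of steps differs, which is where real SIMD hardware gains its speed.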

Both of these features will bring significant performance improvements to WebAssembly applications.

64-bit

Another feature that will allow further improvements is support for 64-bit addressing.

There’s not much to say on this topic: with 32-bit addresses, a Wasm linear memory can address at most 4 GiB. 64-bit addressing will remove that limit, giving Wasm programs access to a far larger address space (in practice bounded by what the engine and the machine allow).
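The 4 GiB figure follows directly from 32-bit addresses and Wasm’s 64 KiB page size. A quick TypeScript check, assuming any JS engine with WebAssembly support (the page size is observed by creating a one-page `WebAssembly.Memory`):

```typescript
// Wasm linear memory grows in pages of 64 KiB.
const PAGE_SIZE = 64 * 1024;

// A 32-bit address can reach 2^32 distinct bytes.
const MAX_32BIT_BYTES = 2 ** 32;

// Upper bound on the number of pages a 32-bit memory can ever have.
const MAX_32BIT_PAGES = MAX_32BIT_BYTES / PAGE_SIZE;

// A one-page memory confirms the page size in the running engine.
const onePage = new WebAssembly.Memory({ initial: 1 });

console.log(MAX_32BIT_PAGES, MAX_32BIT_BYTES / 1024 ** 3, onePage.buffer.byteLength);
// 65536 4 65536
```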

In terms of development, the feature is still at the pre-proposal stage, but it looks like the working group has a fairly clear plan for how to implement it.

Language features

Let’s now talk about support for high-level language features. It will unlock the development of two more kinds of software:

- Frameworks

- Compile-to-JS languages, like Scala.js, which could compile to WebAssembly instead

To make it possible to write these kinds of software in WebAssembly, we need support for several high-level language features, such as:

- garbage collector

- exception handling

- better debugging

Garbage collector

Right now WebAssembly doesn’t have direct access to the browser’s garbage collector, but this will be needed to unlock certain memory management optimizations.

Lin Clark gives a good example of how this feature could be used. Imagine you want to reimplement a virtual DOM in WebAssembly. Internally it would manage memory directly to optimize performance, but the diffing algorithm would be tightly coupled to the JS objects it needs to compare. The browser couldn’t clean up those objects by itself, because WebAssembly would still be using them.
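Without GC integration, a common workaround is to keep JS objects on the JS side and hand Wasm only an integer "handle" (an index into a table), since numbers are all Wasm can hold. The TypeScript sketch below shows that pattern; `HandleTable`, `retain`, `get`, and `release` are hypothetical names, not a real API. The catch is the manual `release` call: the browser’s GC can’t collect an object while its slot is occupied, which is exactly the kind of bookkeeping GC integration aims to remove.

```typescript
// Hypothetical handle table: JS objects live here, Wasm only sees indices.
class HandleTable {
  private slots: (object | undefined)[] = [];
  private freeList: number[] = [];

  // Store an object and return the integer handle Wasm would receive.
  retain(value: object): number {
    const handle = this.freeList.pop();
    if (handle !== undefined) {
      this.slots[handle] = value;
      return handle;
    }
    this.slots.push(value);
    return this.slots.length - 1;
  }

  // Look up the object behind a handle passed back from Wasm.
  get(handle: number): object {
    const value = this.slots[handle];
    if (value === undefined) throw new Error(`invalid handle ${handle}`);
    return value;
  }

  // Must be called explicitly: until then the GC cannot reclaim the object.
  release(handle: number): void {
    this.slots[handle] = undefined;
    this.freeList.push(handle);
  }

  get liveCount(): number {
    return this.slots.filter((v) => v !== undefined).length;
  }
}

const table = new HandleTable();
const nodeA = table.retain({ tag: "div" });
const nodeB = table.retain({ tag: "span" });
table.release(nodeA); // forgetting this would keep the object alive forever
const nodeC = table.retain({ tag: "p" }); // reuses the freed slot

console.log(nodeA, nodeB, nodeC, table.liveCount); // 0 1 0 2
```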

We need two things to have a proper GC in WebAssembly:

This feature is still at the feature proposal stage, and it will take some time before it becomes available.

Exception handling

Another very important feature that isn’t available yet is native exception handling.

Exceptions are currently disabled by default when compiling an app to WebAssembly. A polyfill is available, but it’s heavy and slows down your app at runtime.

And even if you write a Wasm program without exceptions, errors may still be thrown by JS code called from Wasm, and right now there’s no proper way to deal with them.
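Until native exception handling lands, one defensive pattern is to stop JS exceptions at the boundary: wrap each imported JS function so it catches errors and returns a numeric status code, which Wasm can check because it deals only in numbers. This TypeScript sketch assumes a hypothetical convention (0 = success, 1 = error) and hypothetical names (`guardImport`, `errorLog`, `parsePositive`); it is not part of any toolchain’s API.

```typescript
// Hypothetical convention: imported JS functions report failure as a number.
const OK = 0;
const ERROR = 1;

// Errors caught at the boundary are kept on the JS side for later inspection.
const errorLog: unknown[] = [];

// Wrap a JS function so it never throws into its Wasm caller.
function guardImport<Args extends unknown[]>(
  fn: (...args: Args) => void,
): (...args: Args) => number {
  return (...args: Args): number => {
    try {
      fn(...args);
      return OK;
    } catch (err) {
      errorLog.push(err);
      return ERROR;
    }
  };
}

// A JS helper that might fail when called from Wasm.
function parsePositive(value: number): void {
  if (value <= 0) throw new RangeError(`expected a positive number, got ${value}`);
}

// This object is what would be passed as the import object to WebAssembly.instantiate.
const imports = { env: { parsePositive: guardImport(parsePositive) } };

const good = imports.env.parsePositive(42);
const bad = imports.env.parsePositive(-1);
console.log(good, bad, errorLog.length, errorLog[0] instanceof RangeError); // 0 1 1 true
```

The Wasm side would then branch on the returned number instead of being unwound by an exception it cannot catch.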

Debugging

Last but not least: debugging.

Browser dev tools for JS are great; it would be awesome to have something similar for WebAssembly.

There’s some work in progress on this, and ideally in the near future you’ll be able to inspect your native code directly from the browser console.

Load-time performance ⏳

Let’s now move on to another aspect of WebAssembly that is continuously being improved: load-time performance, or how long it takes for a .wasm file to be ready to run in the browser.

Note that these improvements depend more on how browsers handle Wasm programs than on the language spec itself.

You might be wondering why we even need specific optimizations for WebAssembly, since the files are delivered and used in the same way as JS.

Well, first of all, WebAssembly apps tend to be very large, and second, the format of Wasm programs allows for certain optimizations that would be impossible in JS:

- Wasm apps are large.

- They provide predictable performance.

The second point is possible because these apps are fully compiled ahead of time, a process that hurts startup time in exchange for faster and more predictable execution.

Streaming compilation

Streaming compilation means compiling a WebAssembly file while it’s still downloading, and Wasm was explicitly designed for that: its binary format lets a module be compiled piece by piece as the bytes arrive.

How does streaming compilation affect the application startup time?

A file is usually not downloaded all at once; it’s delivered over the network in chunks.

In the gif above, we can see that without streaming compilation a Wasm resource is treated like any JS resource: we need to wait for the entire file to be downloaded before compilation can start.

This keeps the compiler idle during the download, when it could already be doing some work.

Because Wasm is built to be compiled piece by piece, compilation can run alongside the download, so a compiled version is ready to run almost as soon as the file has finished downloading.

Some browsers have already shipped streaming compilation support:

Browsers like Chrome and Firefox compile Wasm files so fast that by the time the file is downloaded, compilation is done too.
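On the JS side, opting into streaming compilation mostly means handing the still-downloading `Response` straight to `WebAssembly.instantiateStreaming` instead of waiting for an `ArrayBuffer`. Here is a minimal TypeScript sketch with a fallback for engines without the streaming API. The inlined bytes are a tiny hand-assembled module exporting `add(i32, i32) -> i32`, assumed only so the example runs without a server; `loadWasm` and `makeDemoResponse` are hypothetical helper names.

```typescript
// Tiny hand-assembled module exporting add(i32, i32) -> i32.
// In a real app these bytes would come from the network instead.
const ADD_WASM = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function 0 uses type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // local.get 0, local.get 1, i32.add
]);

async function loadWasm(source: Promise<Response>): Promise<WebAssembly.Instance> {
  if (typeof WebAssembly.instantiateStreaming === "function") {
    // Streaming path: compilation starts while the body is still arriving.
    // The response must be served with Content-Type: application/wasm.
    const { instance } = await WebAssembly.instantiateStreaming(source, {});
    return instance;
  }
  // Fallback (MVP-style): wait for the whole file, then compile it.
  const bytes = await (await source).arrayBuffer();
  const { instance } = await WebAssembly.instantiate(bytes, {});
  return instance;
}

// In a browser you would call loadWasm(fetch("./module.wasm")).
// Here a fresh Response is built from the inlined bytes instead.
function makeDemoResponse(): Promise<Response> {
  return Promise.resolve(
    new Response(ADD_WASM, { headers: { "Content-Type": "application/wasm" } }),
  );
}

loadWasm(makeDemoResponse()).then((instance) => {
  const add = instance.exports.add as (a: number, b: number) => number;
  console.log(add(2, 3)); // 5
});
```

Serving `.wasm` files with the `application/wasm` MIME type matters here: `instantiateStreaming` rejects responses with any other content type.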

Tiered compilation

Still on the topic of compilation, another improvement browsers have kept working on since the MVP is tiered compilation: multiple compilers working at different levels.

Why would we want to do so?

Well, to make the compiled code run as fast as possible, browsers need to apply optimizations while compiling, and these optimizations take time. With a tiered compilation stack, you get these optimizations without slowing down startup.

A baseline compiler quickly produces a lightly optimized version that lets the program start executing as soon as possible. Then, while the program runs, an optimizing compiler recompiles the code into faster output and swaps it in when it’s ready.
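The swap described above can be modeled as a toy in TypeScript. This is only an analogy: engines replace machine code internally, not JS functions, and `TieredFunction`, `baselineSum`, and `optimizedSum` are hypothetical names. What it illustrates is that callers keep using the same entry point and always get the same result; only the implementation behind it changes once the optimized version is ready.

```typescript
type Impl = (n: number) => number;
type Tier = "baseline" | "optimized";

// Toy model of tier-up: serve calls with the quickly available baseline
// version, then switch to the optimized one once the background "compiler"
// (here just a Promise) delivers it.
class TieredFunction {
  private current: Impl;
  private tier: Tier = "baseline";

  constructor(baseline: Impl, optimized: Promise<Impl>) {
    this.current = baseline;
    optimized.then((fast) => {
      this.current = fast;
      this.tier = "optimized";
    });
  }

  currentTier(): Tier {
    return this.tier;
  }

  call(n: number): number {
    return this.current(n);
  }
}

// "Baseline": straightforward loop, available immediately.
const baselineSum: Impl = (n) => {
  let total = 0;
  for (let i = 1; i <= n; i++) total += i;
  return total;
};

// "Optimized": same result, computed in constant time.
const optimizedSum: Impl = (n) => (n * (n + 1)) / 2;

// Simulate the optimizing compiler finishing 10 ms later.
const sumTo = new TieredFunction(
  baselineSum,
  new Promise<Impl>((resolve) => setTimeout(() => resolve(optimizedSum), 10)),
);

console.log(sumTo.currentTier(), sumTo.call(100)); // baseline 5050
```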

Good news here too: the feature has already shipped in many major browsers!

The most popular browsers on the market all provide some form of tiered compilation for WebAssembly. I won’t go into the details here, but at these links you can find more information directly from the people building the compilers.

Implicit HTTP caching

To take advantage of WebAssembly’s predictable compilation output, browsers will start caching the compiled machine code and reusing it instead of compiling the .wasm file again.

Wasm and JS modules

Calls between JS and Wasm environments

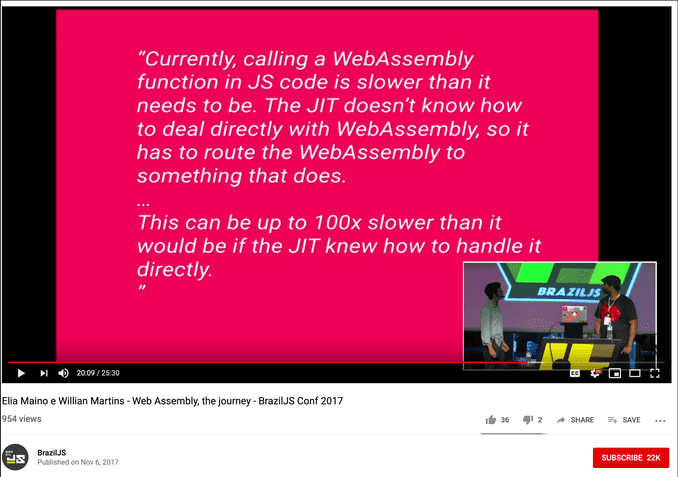

One thing that keeps getting better is the speed of calls between the JS and Wasm environments. These calls used to be slow, but they’re getting faster and faster, removing what was an early bottleneck for Wasm modules.

See how Mozilla improved that.

In the talk I gave at BrazilJS in 2017, this slow boundary crossing was what made using a .wasm script less performant than an implementation using web workers:

It’s amazing to see how, in less than two years, a major performance issue like that has stopped being a problem!
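For context, this is what such a boundary crossing looks like from the JS side: every call to an exported Wasm function jumps from JS into Wasm and back. The TypeScript sketch below uses synchronous instantiation (`new WebAssembly.Module` / `new WebAssembly.Instance`, acceptable for a module this small) and a hand-assembled module exporting `add(i32, i32) -> i32`, assumed purely for illustration; `sumWithWasm` is a hypothetical helper. The printed timing depends on the engine and machine, so no particular number should be expected.

```typescript
// Hand-assembled module exporting add(i32, i32) -> i32.
const ADD_WASM = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function 0 uses type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // local.get 0, local.get 1, i32.add
]);

// Synchronous compile + instantiate. Some browsers only allow this for small
// modules on the main thread, which is fine for a few dozen bytes.
const wasmModule = new WebAssembly.Module(ADD_WASM);
const wasmInstance = new WebAssembly.Instance(wasmModule, {});
const add = wasmInstance.exports.add as (a: number, b: number) => number;

// Every loop iteration is one JS -> Wasm call.
function sumWithWasm(iterations: number): number {
  let total = 0;
  for (let i = 0; i < iterations; i++) {
    total = add(total, 1);
  }
  return total;
}

const start = performance.now();
const result = sumWithWasm(1_000_000);
const elapsedMs = performance.now() - start;
console.log(result, `${elapsedMs.toFixed(1)} ms`);
```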

Data exchange between JS and Wasm environments

WebAssembly only understands numbers, which makes communication between Wasm modules and JS modules a bit hard, since JS apps rely on complex data types.

There are some proposals to let JS and Wasm communicate directly using objects, and once they ship, Wasm module APIs will certainly become easier to use.
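Until then, passing something like a string means encoding it into the module’s linear memory and handing Wasm a pointer and a length, both plain numbers. A minimal TypeScript sketch, assuming a standalone `WebAssembly.Memory` and a fixed offset; in a real module the offset would come from the module’s own allocator, and `writeString` / `readString` are hypothetical helper names.

```typescript
// One page (64 KiB) of linear memory, standing in for a module's memory export.
const memory = new WebAssembly.Memory({ initial: 1 });

// Encode a JS string as UTF-8 into linear memory; return (pointer, length),
// the only kind of values a Wasm function can receive.
function writeString(mem: WebAssembly.Memory, offset: number, text: string): [number, number] {
  const bytes = new TextEncoder().encode(text);
  new Uint8Array(mem.buffer, offset, bytes.length).set(bytes);
  return [offset, bytes.length];
}

// Decode the bytes a Wasm function points to back into a JS string.
function readString(mem: WebAssembly.Memory, ptr: number, len: number): string {
  return new TextDecoder().decode(new Uint8Array(mem.buffer, ptr, len));
}

const [ptr, len] = writeString(memory, 16, "héllo wasm");
console.log(ptr, len, readString(memory, ptr, len)); // 16 11 héllo wasm
```

Note that the length is counted in bytes, not characters: "é" takes two bytes in UTF-8, so the 10-character string occupies 11 bytes.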

ES module integration

Wasm modules are currently instantiated using an imperative API: you call some functions and get back a module. As a result, the Wasm module is not really part of the JS module graph.

That’s why we need ES module integration.

Something like:

`<script type="module" src="./module.wasm"></script>`

There’s a proposal for that too.
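For comparison, here is a small TypeScript sketch of today’s imperative route: compile the bytes, inspect what the module exports with `WebAssembly.Module.exports`, then instantiate it and pull functions off `instance.exports` by hand. The module bytes are a hand-assembled example exporting `add`, and `loadAddModule` is a hypothetical helper. With ES module integration, the hope is that the engine does this linking itself, so the `import` line in the final comment is proposal syntax, not something to run today.

```typescript
// Hand-assembled module exporting add(i32, i32) -> i32.
const ADD_WASM = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function 0 uses type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // local.get 0, local.get 1, i32.add
]);

interface LoadedAdd {
  exportNames: string[];
  add: (a: number, b: number) => number;
}

async function loadAddModule(): Promise<LoadedAdd> {
  // 1. Compile the raw bytes into a WebAssembly.Module.
  const compiled = await WebAssembly.compile(ADD_WASM);

  // 2. Inspect the module's interface: the information ES module
  //    integration would need to link it into the JS module graph for us.
  const exportNames = WebAssembly.Module.exports(compiled).map((e) => `${e.kind}:${e.name}`);

  // 3. Instantiate it (no imports needed) and pick the export out by hand.
  const instance = await WebAssembly.instantiate(compiled, {});
  const add = instance.exports.add as (a: number, b: number) => number;
  return { exportNames, add };
}

loadAddModule().then(({ exportNames, add }) => {
  console.log(exportNames.join(", ")); // function:add
  console.log(add(40, 2)); // 42
});

// With ES module integration, the hope is that all of the above becomes:
//   import { add } from "./module.wasm";
```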

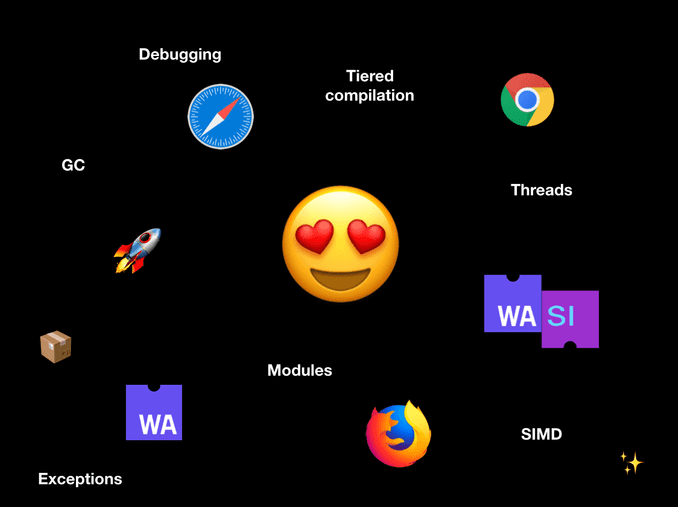

WebAssembly: so much going on

As you can see, there’s so much to be excited about in the WebAssembly world.

It’s a vibrant ecosystem that has only just come to life, and we’re lucky enough to be able to play with it and influence how it evolves.

Thanks for reading 🙂